Source – Towards Data Science

In artificial intelligence and computer vision, Convolutional Neural Networks (CNNs) have changed the way machines perceive and analyze visual data. From image classification and object detection to facial recognition and medical imaging, CNNs have become a cornerstone technology across many fields. In this guide, we'll explore why convolutional neural networks matter, walk through their architecture and how they work, and highlight their impact on image processing and beyond.

Understanding Convolutional Neural Networks (CNNs)

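Convolutional Neural Networks (CNNs) are a class of deep neural networks designed to process and analyze grid-like visual data, such as images and video frames. They are inspired by the organization of the visual cortex, where individual neurons respond to stimuli in small regions of the visual field. In the same spirit, each neuron in a convolutional layer looks only at a local region of its input, and the same weights are reused at every position. Stacking such layers lets the network extract simple features (edges, textures) early on, combine them into more abstract ones (parts, whole objects) in deeper layers, and make predictions based on the learned patterns and relationships.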

Key Components of Convolutional Neural Networks

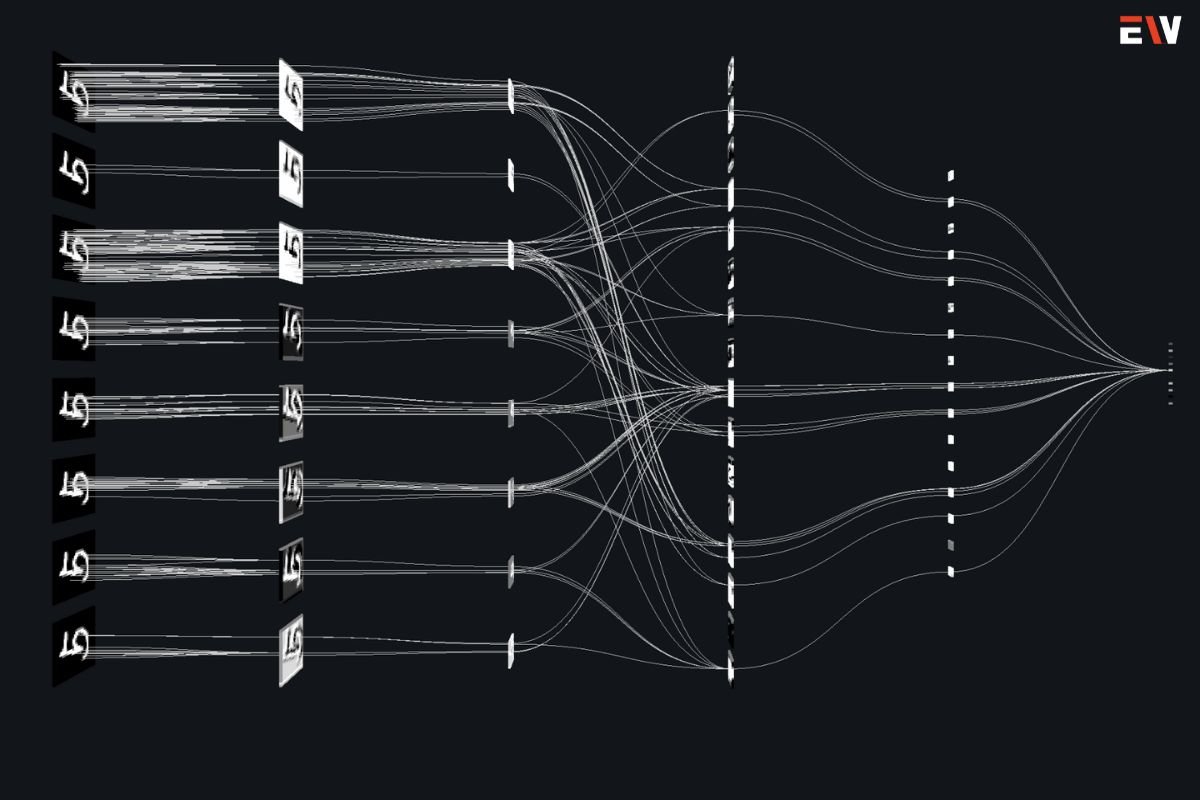

1. Convolutional Layers:

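Convolutional layers are the core building blocks of CNNs and are responsible for feature extraction. Each convolutional layer holds a set of learnable filters (kernels), which are small grids of weights such as 3×3. A filter slides across the input, and at every position it multiplies its weights element-wise with the underlying patch and sums the results. The grid of these sums forms a feature map that responds strongly wherever the filter's pattern, such as an edge, appears. Because the same filter is reused at every position, a convolutional layer needs far fewer parameters than a fully connected layer applied to the same input.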
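To make the sliding-window operation concrete, here is a minimal single-channel sketch in plain Python. It assumes stride 1 and no padding, and `conv2d` is a hypothetical helper name rather than any library's API. Like most deep learning frameworks, it does not flip the kernel, so strictly speaking it computes a cross-correlation, which is what CNN "convolution" layers usually mean in practice.

```python
def conv2d(image, kernel, bias=0.0):
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map."""
    kh, kw = len(kernel), len(kernel[0])
    ih, iw = len(image), len(image[0])
    out_h, out_w = ih - kh + 1, iw - kw + 1  # "valid" output size
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Sum of element-wise products between the kernel and the patch under it
            acc = bias
            for u in range(kh):
                for v in range(kw):
                    acc += image[i + u][j + v] * kernel[u][v]
            row.append(acc)
        feature_map.append(row)
    return feature_map


# A 4x4 image: dark on the left, bright on the right
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hand-picked kernel for illustration; in a CNN it would be learned
vertical_edge = [
    [-1, 1],
    [-1, 1],
]
print(conv2d(image, vertical_edge))  # [[0.0, 2.0, 0.0], [0.0, 2.0, 0.0], [0.0, 2.0, 0.0]]
```

The feature map lights up only in the middle column, where the dark-to-bright edge sits. With an H×W input and a K×K kernel, this setting yields an (H - K + 1)×(W - K + 1) feature map; more generally, with padding P and stride S, each output dimension is floor((H + 2P - K) / S) + 1. Real layers also sum over all input channels and learn many kernels at once.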

2. Pooling Layers:

Pooling layers downsample the spatial dimensions of the feature maps produced by convolutional layers, reducing the computation and memory needed in later layers. The two common operations are max pooling, which keeps the largest value in each window, and average pooling, which keeps the mean. Both summarize each local region while preserving the coarse spatial layout of the feature map, make the representation less sensitive to small shifts in the input, and have no learnable parameters.
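A minimal pure-Python sketch of both pooling operations follows, assuming a single 2D feature map, square windows, and no padding (windows that would run past the edge are dropped). The `pool2d` name and its parameters are illustrative, not a library API.

```python
def pool2d(feature_map, size=2, stride=2, mode="max"):
    """Downsample a 2D feature map with max or average pooling (no padding)."""
    h, w = len(feature_map), len(feature_map[0])
    out = []
    for i in range(0, h - size + 1, stride):
        row = []
        for j in range(0, w - size + 1, stride):
            # Collect every value inside the current size x size window
            window = [feature_map[i + u][j + v] for u in range(size) for v in range(size)]
            if mode == "max":
                row.append(max(window))  # keep the strongest response
            elif mode == "avg":
                row.append(sum(window) / len(window))  # keep the mean response
            else:
                raise ValueError(f"unknown pooling mode: {mode}")
        out.append(row)
    return out


fm = [
    [1, 3, 2, 0],
    [4, 6, 1, 1],
    [0, 2, 5, 7],
    [1, 1, 8, 4],
]
print(pool2d(fm, mode="max"))  # [[6, 2], [2, 8]]
print(pool2d(fm, mode="avg"))  # [[3.5, 1.0], [1.0, 6.0]]
```

A 2×2 window with stride 2, the most common configuration, halves each spatial dimension and so cuts the number of values by a factor of four.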

3. Activation Functions:

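Activation functions introduce non-linearity into the network, enabling CNNs to learn complex patterns and relationships in the input data; without them, a stack of convolutional and fully connected layers would collapse into a single linear transformation. Popular choices include ReLU (Rectified Linear Unit), sigmoid, and tanh, applied element-wise to the outputs of convolutional and fully connected layers. ReLU, defined as max(0, x), is the default in most modern CNNs because it is cheap to compute and avoids the vanishing gradients that sigmoid and tanh suffer from when their inputs are large in magnitude.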
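The three activations named above fit in a few lines. This sketch uses only Python's `math` module; `apply_elementwise` is a hypothetical helper showing that an activation acts on every value of a feature map independently.

```python
import math


def relu(x):
    # Passes positive values through unchanged and zeroes out negatives
    return max(0.0, x)


def sigmoid(x):
    # Squashes any real number into (0, 1); split by sign so math.exp never overflows
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def tanh(x):
    # Squashes any real number into (-1, 1)
    return math.tanh(x)


def apply_elementwise(fn, feature_map):
    # Activations are applied to each value of a feature map on its own
    return [[fn(v) for v in row] for row in feature_map]


feature_map = [[-2.0, 0.0], [1.5, -0.5]]
print(apply_elementwise(relu, feature_map))  # [[0.0, 0.0], [1.5, 0.0]]
print(apply_elementwise(sigmoid, feature_map))
print(apply_elementwise(tanh, feature_map))
```

Note how sigmoid flattens out toward 0 or 1 for large inputs; its gradient there is nearly zero, which is the saturation problem ReLU avoids for positive values.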

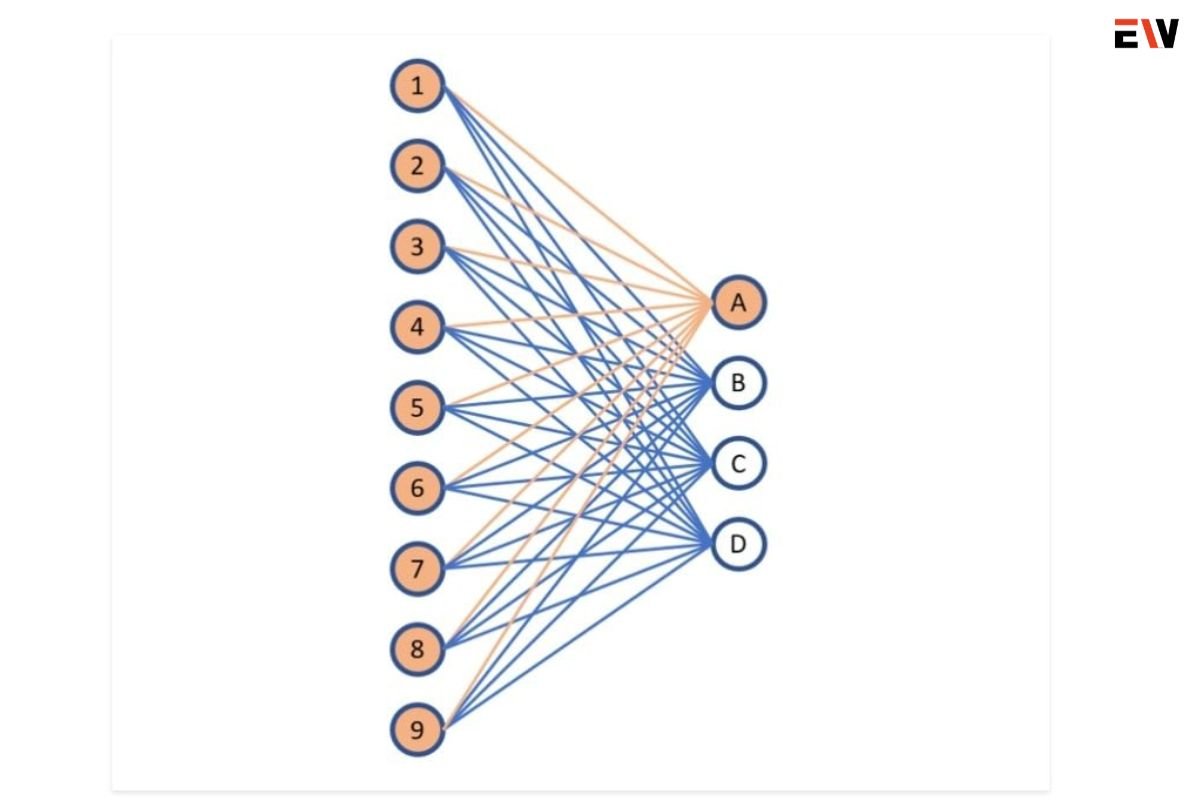

4. Fully Connected Layers:

Fully connected layers, also known as dense layers, are typically placed at the end of a CNN to perform classification or regression based on the features extracted by earlier layers. The final feature maps are first flattened (or globally pooled) into a single vector. Each dense layer then connects every neuron in one layer to every neuron in the next, computing a weighted sum of all its inputs plus a bias, which lets the network combine the learned features into high-level predictions.
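As a rough sketch, assume the last convolutional block outputs two 2×2 feature maps. Flattening them and passing the result through a dense layer with two outputs looks like this in plain Python. The helper names and the hand-picked weights are illustrative; in a trained network the weights and biases are learned.

```python
def flatten(feature_maps):
    # feature_maps: a list of channels, each a 2D list -> one flat vector
    return [value for channel in feature_maps for row in channel for value in row]


def dense(x, weights, bias):
    # weights: one row per output neuron, each row with len(x) entries
    if any(len(row) != len(x) for row in weights):
        raise ValueError("each weight row must match the input length")
    # Each output = weighted sum of ALL inputs + that neuron's bias
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


feature_maps = [
    [[1.0, 0.0], [0.0, 2.0]],  # channel 0
    [[0.5, 0.5], [1.0, 0.0]],  # channel 1
]
x = flatten(feature_maps)  # 8 values
weights = [
    [1.0] * 8,                                  # neuron 0: sums every input
    [1.0, 0.0, 0.0, -1.0, 0.0, 0.0, 0.0, 0.0],  # neuron 1: contrasts two inputs
]
bias = [0.0, 0.5]
print(dense(x, weights, bias))  # [5.0, -0.5]
```

Every output depends on every input value, which is why even this tiny layer has 8 × 2 weights plus 2 biases, and why dense layers dominate the parameter count of classic CNNs such as VGG.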

Transformative Impact of Convolutional Neural Networks

1. Image Classification:

CNNs have transformed image classification. Since AlexNet won the ImageNet Large Scale Visual Recognition Challenge in 2012, CNN architectures have repeatedly set the state of the art on benchmark datasets such as ImageNet. By learning hierarchical representations of visual features, CNNs can accurately assign images to predefined categories, enabling applications such as autonomous driving, medical diagnosis, and content-based image retrieval.
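In a classifier, the final dense layer produces one raw score (logit) per category, and a softmax turns those scores into probabilities. Below is a minimal sketch assuming a hypothetical three-class label set; the logits are made-up numbers standing in for a network's output.

```python
import math


def softmax(logits):
    # Subtract the max before exponentiating for numerical stability;
    # this does not change the resulting probabilities
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict(logits, class_names):
    # Pick the category with the highest probability
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return class_names[best], probs[best]


class_names = ["cat", "dog", "car"]  # hypothetical label set
label, confidence = predict([2.0, 1.0, 0.1], class_names)
print(label, round(confidence, 3))  # cat 0.659
```

During training, the same probabilities feed a cross-entropy loss that pushes the correct category's probability toward 1.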

2. Object Detection and Localization:

CNN-based detectors identify objects within images or video frames and localize each one with a bounding box. Using techniques such as region proposal networks (RPNs) and anchor-based detection, they can find multiple objects of interest within complex scenes, paving the way for applications in surveillance, robotics, and augmented reality.
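Anchor-based detectors decide which predefined anchor boxes should learn to detect an object by measuring their overlap with ground-truth boxes, usually via intersection over union (IoU). The sketch below is simplified: boxes are assumed to be `(x1, y1, x2, y2)` tuples, the 0.7 and 0.3 thresholds are illustrative, and real detectors add further rules (for example, always treating the best-matching anchor for each object as positive).

```python
def iou(box_a, box_b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    # Corners of the overlapping rectangle (may be empty)
    ix1 = max(box_a[0], box_b[0])
    iy1 = max(box_a[1], box_b[1])
    ix2 = min(box_a[2], box_b[2])
    iy2 = min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def label_anchors(anchors, gt_boxes, pos_thresh=0.7, neg_thresh=0.3):
    """Label each anchor 1 (object), 0 (background) or -1 (ignored in training)."""
    labels = []
    for anchor in anchors:
        best = max((iou(anchor, gt) for gt in gt_boxes), default=0.0)
        if best >= pos_thresh:
            labels.append(1)
        elif best < neg_thresh:
            labels.append(0)
        else:
            labels.append(-1)
    return labels


gt_boxes = [(10, 10, 50, 50)]
anchors = [(12, 12, 50, 50), (20, 10, 60, 50), (100, 100, 140, 140)]
print(label_anchors(anchors, gt_boxes))  # [1, -1, 0]
```

Anchors labelled 1 are trained to classify the object and refine their box coordinates toward the ground truth; anchors labelled 0 are trained as background.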

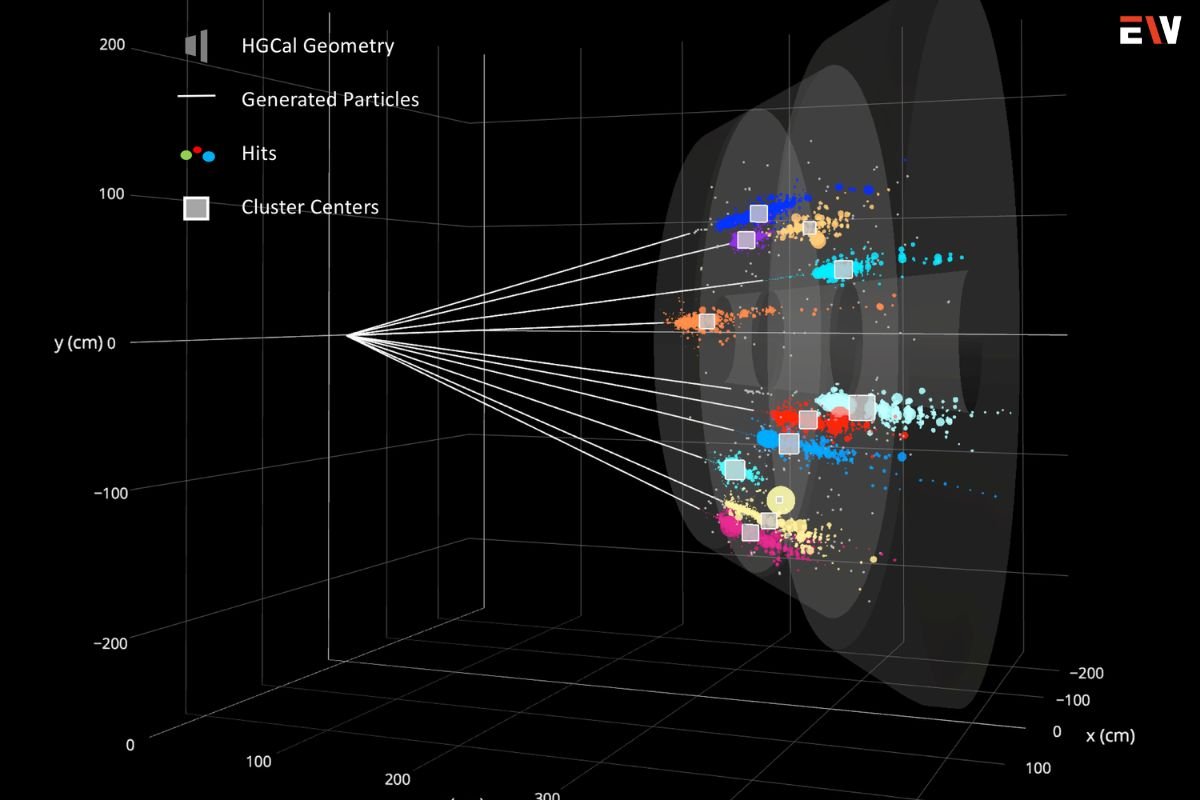

3. Semantic Segmentation:

Semantic segmentation involves partitioning an image into semantically meaningful regions and assigning a class label to each pixel. CNNs have demonstrated remarkable performance in semantic segmentation tasks, enabling applications such as autonomous navigation, medical image analysis, and environmental monitoring.
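At inference time, a segmentation network typically outputs one score map per class, and each pixel takes the label with the highest score. A minimal sketch, assuming the scores are nested lists indexed as `score_maps[class][row][col]` and using a hypothetical two-class label set (0 = background, 1 = road):

```python
def segment(score_maps):
    """Return a label map: for every pixel, the class with the highest score."""
    num_classes = len(score_maps)
    h, w = len(score_maps[0]), len(score_maps[0][0])
    return [
        [max(range(num_classes), key=lambda c: score_maps[c][i][j]) for j in range(w)]
        for i in range(h)
    ]


score_maps = [
    [[0.9, 0.2, 0.1], [0.8, 0.3, 0.4]],  # class 0: background
    [[0.1, 0.8, 0.9], [0.2, 0.7, 0.6]],  # class 1: road
]
print(segment(score_maps))  # [[0, 1, 1], [0, 1, 1]]
```

The output has the same height and width as the input, which is the key difference from image classification, where the whole image gets a single label.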

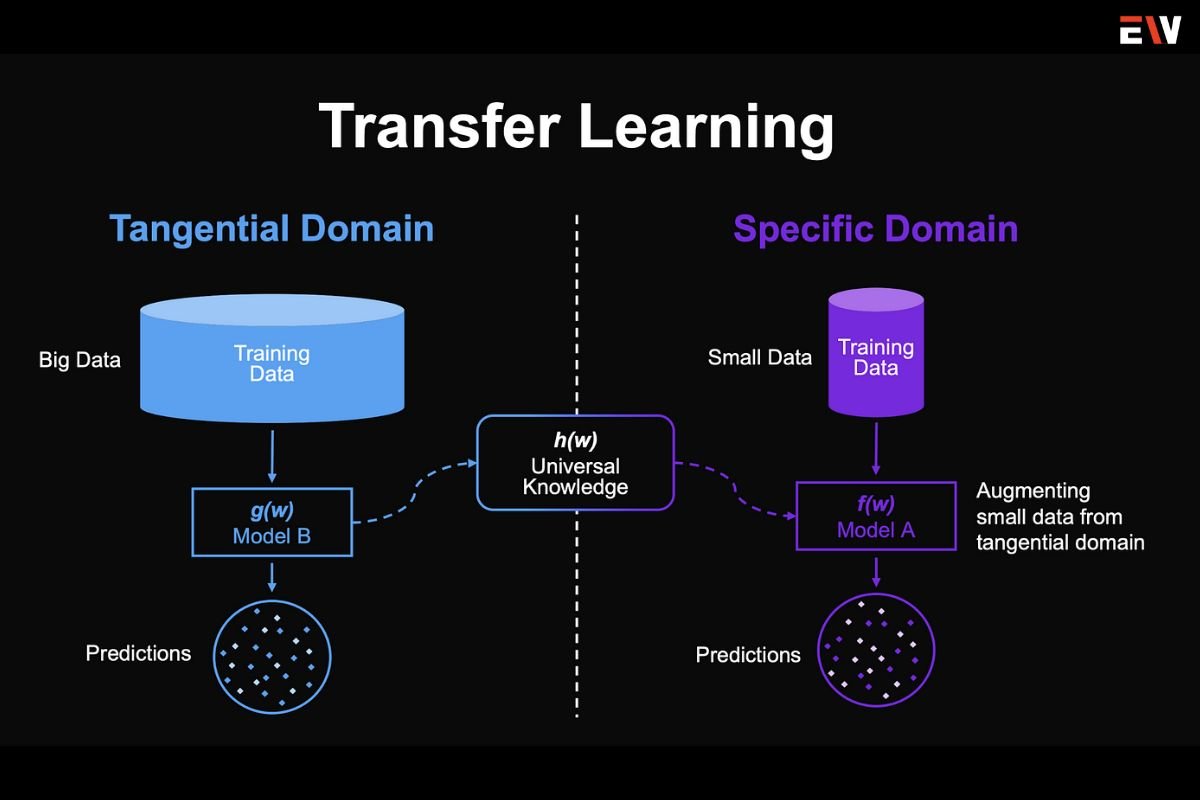

4. Transfer Learning and Domain Adaptation:

CNNs trained on large-scale datasets can be fine-tuned or adapted to new tasks and domains with relatively few labeled examples, thanks to transfer learning techniques. By leveraging pre-trained CNN models as feature extractors, researchers and practitioners can accelerate model development and achieve competitive performance on task-specific datasets.
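The idea of reusing a frozen feature extractor and training only a small new head can be sketched without any deep learning library. In the example below, `frozen_feature_extractor` is a hypothetical hand-written stand-in for a pre-trained CNN backbone (it is not actually pre-trained), and the new head is a single logistic-regression unit trained with plain stochastic gradient descent on four labelled examples.

```python
import math


def frozen_feature_extractor(image):
    # HYPOTHETICAL stand-in for a pre-trained CNN backbone. It is never updated;
    # it returns mean brightness and (right half - left half) brightness contrast.
    flat = [p for row in image for p in row]
    half = len(image[0]) // 2
    left = sum(p for row in image for p in row[:half])
    right = sum(p for row in image for p in row[half:])
    return [sum(flat) / len(flat), (right - left) / len(flat)]


def train_head(features, labels, lr=0.5, epochs=500):
    # Only the new head (one logistic-regression unit) is trained, with plain SGD
    w = [0.0] * len(features[0])
    b = 0.0
    for _ in range(epochs):
        for x, y in zip(features, labels):
            z = sum(wi * xi for wi, xi in zip(w, x)) + b
            p = 1.0 / (1.0 + math.exp(-z))
            grad = p - y  # gradient of binary cross-entropy with respect to z
            w = [wi - lr * grad * xi for wi, xi in zip(w, x)]
            b -= lr * grad
    return w, b


def classify(w, b, image):
    # Frozen features -> trained head -> label 1 if the logit is positive
    x = frozen_feature_extractor(image)
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1 if z > 0 else 0


# A handful of labelled examples: 1 = "bright on the right", 0 = "bright on the left"
train_images = [
    [[0.0, 1.0], [0.0, 1.0]],
    [[1.0, 0.0], [1.0, 0.0]],
    [[0.1, 0.9], [0.2, 0.8]],
    [[0.9, 0.1], [0.8, 0.2]],
]
train_labels = [1, 0, 1, 0]

features = [frozen_feature_extractor(img) for img in train_images]
w, b = train_head(features, train_labels)
print(classify(w, b, [[0.2, 0.7], [0.1, 0.6]]))  # unseen image -> 1
```

In a real framework the pattern is the same: load a pre-trained model, stop gradient updates to its layers (in PyTorch, by setting `requires_grad = False` on their parameters), and replace the final classification layer with one sized for the new task. Fine-tuning then optionally unfreezes some of the later layers as well.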

Future Directions and Challenges

As CNNs continue to evolve, researchers are exploring novel architectures, optimization techniques, and applications to push the boundaries of what’s possible in image processing and computer vision. However, challenges such as data scarcity, robustness to adversarial attacks, and interpretability remain areas of active research and innovation, highlighting the need for continued collaboration and interdisciplinary efforts in the field.

Conclusion

Convolutional Neural Networks (CNNs) represent a paradigm shift in image processing and computer vision, enabling machines to perceive, interpret, and analyze visual information far more accurately than earlier approaches built on hand-engineered features. From image classification and object detection to semantic segmentation and beyond, CNNs have unlocked a wealth of possibilities across diverse domains, transforming industries and driving rapid innovation. As CNNs continue to mature, their impact on society, science, and technology is likely to keep growing, paving the way for a future where intelligent machines interact seamlessly with the visual world around us.